Advanced SQL: Querying a Business PostGreSQL Database with SQLAlchemy Core

PostGreSQL + SQLAlchemy Core Hands-on Practice

Tech Stack

Python 3,

PostGreSQL, SQLAlchemy (Core),

VsCode, Jupyter, GitHub

Project

Execution of complex SQL queries and best practices demonstrating practical professional usage.

Benefits

PostGreSQL databases can be securely analyzed in a local Python environment using SQLAlchemy thanks to its automatic parameterization. The Core approach enhances efficiency with its declarative, Pythonic design.

Goals

Enhancing my SQL and SQLAlchemy skills.

I spent 9 hours divided this way and in this order:

7 hrs:

coding the queries

exploring and analysing the data through a few visualizations

giving my observations and insights

2 hrs:

reviewing code, reviewing the notebook outline, cleaning up

Introduction: SQLAlchemy Efficiency Through Python

You can find the full notebook and more queries in my Data Analyst GitHub repository.

For another hands-on practice project example, read my previous SQLAlchemy Core article where I started this whole project of exploring and querying this business PostgreSQL dvdrental database. So the link above is to the part 2 of the project, and this is its article here.

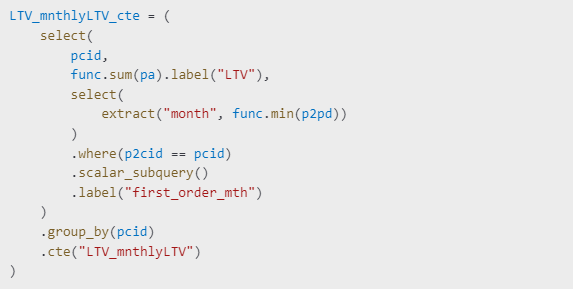

In the world of data analytics and database querying, efficiency is paramount. SQLAlchemy, a powerful Python toolkit, enhances this efficiency by allowing reusable and structured queries. By leveraging Common Table Expressions (CTEs) and subqueries, we can write complex queries without redundancy. This not only saves time but also makes the code easier to read, maintain, and debug.

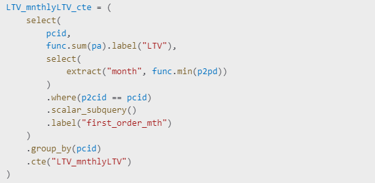

For instance, in my exploration of the dvdrental PostgreSQL database, I frequently reused queries via the .cte() and .subquery() methods. Here’s an example:

This approach eliminates code duplication and makes queries more readable. I could then reuse this LTV_mnthlyLTV_cte in another query without having to redefine its logic.

Advanced Querying: Filtering with Subqueries and Efficient Self-Joins

Filtering with subqueries is often preferable to using multiple WHERE clauses because it improves performance and makes queries more modular. Instead of applying multiple filters separately, subqueries encapsulate filtering logic, making it more structured.

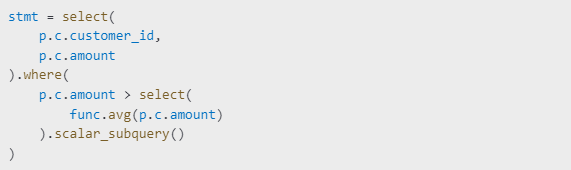

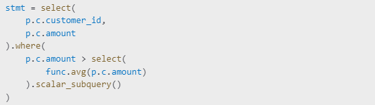

For example, instead of filtering with separate WHERE clauses:

The inline subquery ensures that only customers with an amount greater than the average are returned without needing an additional join or filter.

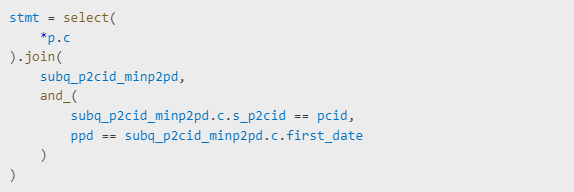

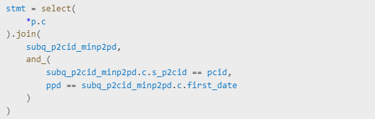

Similarly, self-joins on multiple columns should be done in a single join clause instead of multiple ones. This prevents redundancy and enhances query efficiency. Here’s an example where I performed a self-join on the payment table:

This approach ensures that I retrieve only the first order for each customer in a single join operation rather than making multiple queries.

Introduction to Window Functions: A Smarter Approach

Window functions are powerful tools that allow calculations across a set of table rows related to the current row. Unlike aggregates, they do not collapse rows, allowing for better insights while preserving row granularity.

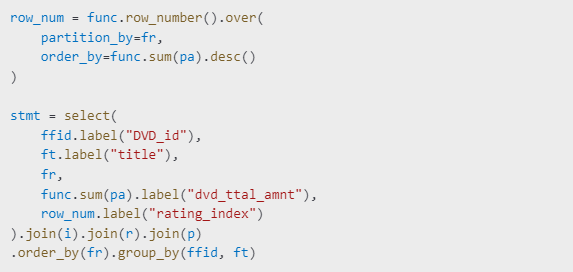

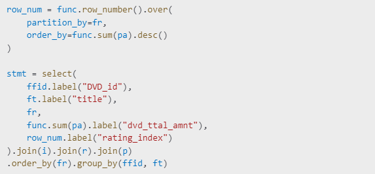

Using row_number() Instead of Multiple CTEs

Instead of using multiple CTEs to partition data and get a ranking, the row_number() function lets us assign an index to each row based on a partitioned and ordered set. This allows us to retrieve the top movie by rating in a much cleaner way:

This eliminates the need for multiple CTEs, making the query faster and more concise.

Importance of Refactoring Code: The Power of Functions

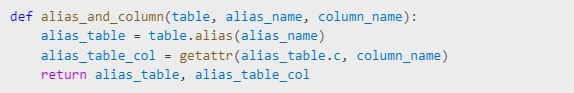

Refactoring code into functions is crucial for maintainability and reusability. When working with SQLAlchemy, repeatedly writing the same aliasing or aggregation logic leads to redundancy.

For example, I refactored the aliasing of tables into a function:

With this function, instead of repeatedly aliasing tables manually, I could simply call:

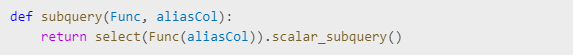

Similarly, for subqueries, I refactored:

This approach significantly improved readability and reusability.

Conclusion: Leveraging SQLAlchemy for Business Insights

This deep dive into advanced SQL querying with SQLAlchemy was both challenging and rewarding. By leveraging my previous SQL practice, I was able to transition smoothly into CTEs, subqueries, window functions, and self-joins in SQLAlchemy Core.

When to Use SQLAlchemy Over Raw SQL

SQLAlchemy shines in specific use cases:

Data Movement: When transferring data between databases efficiently.

E-Commerce Applications: Dynamic querying for user transactions, inventory tracking, and analytics.

Machine Learning Pipelines: Preparing and preprocessing structured data before modeling.

However, for pure EDA (Exploratory Data Analysis) and visualization, DuckDB would have been a better choice. DuckDB:

Processes data in-memory, making it much faster for large queries.

Has seamless integration with Pandas, avoiding the need for .execute() calls.

Does not require SQLAlchemy overhead, making queries simpler for analysis.

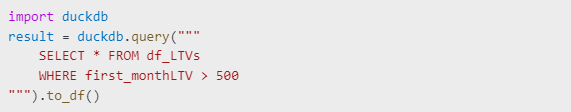

For example, I could have used DuckDB to perform in-memory SQL queries directly on a Pandas dataframe:

This makes EDA workflows much faster than SQLAlchemy + Pandas combined.

Overall, this experience reinforced the value of leveraging the right tools for the right tasks. SQLAlchemy was excellent for structured querying, while DuckDB would have accelerated data exploration and visualization. Moving forward, I will integrate both tools depending on the use case.

💡 If you want to explore my full SQLAlchemy journey, check out my GitHub repository.